Upload folder using huggingface_hub

- .gitattributes +1 -0
- README.md +286 -0
- added_tokens.json +28 -0
- chat_template.jinja +110 -0
- chat_template.json +3 -0
- config.json +421 -0
- generation_config.json +14 -0
- merges.txt +0 -0
- model-00001-of-00024.safetensors +3 -0
- model-00002-of-00024.safetensors +3 -0
- model-00003-of-00024.safetensors +3 -0
- model-00004-of-00024.safetensors +3 -0
- model-00005-of-00024.safetensors +3 -0
- model-00006-of-00024.safetensors +3 -0
- model-00007-of-00024.safetensors +3 -0
- model-00008-of-00024.safetensors +3 -0
- model-00009-of-00024.safetensors +3 -0
- model-00010-of-00024.safetensors +3 -0
- model-00011-of-00024.safetensors +3 -0
- model-00012-of-00024.safetensors +3 -0
- model-00013-of-00024.safetensors +3 -0
- model-00014-of-00024.safetensors +3 -0
- model-00015-of-00024.safetensors +3 -0
- model-00016-of-00024.safetensors +3 -0
- model-00017-of-00024.safetensors +3 -0
- model-00018-of-00024.safetensors +3 -0
- model-00019-of-00024.safetensors +3 -0
- model-00020-of-00024.safetensors +3 -0
- model-00021-of-00024.safetensors +3 -0
- model-00022-of-00024.safetensors +3 -0
- model-00023-of-00024.safetensors +3 -0
- model-00024-of-00024.safetensors +3 -0
- model.safetensors.index.json +0 -0
- preprocessor_config.json +39 -0
- special_tokens_map.json +31 -0
- tokenizer.json +3 -0
- tokenizer_config.json +242 -0
- video_preprocessor_config.json +41 -0
- vocab.json +0 -0
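The weights are split into 24 `safetensors` shards. `model.safetensors.index.json` maps each tensor name to the shard that stores it, although its diff is not rendered on this page. Below is a minimal sketch for checking that a local download is complete. It assumes the usual Hugging Face index layout, which has a top-level `weight_map` dictionary. `check_download` is a hypothetical helper, and the tensor names in the toy index are made up.

```python
import json
import os

def expected_shards(total):
    # Shard naming used in this repo: model-00001-of-00024.safetensors, ...
    return [f"model-{i:05d}-of-{total:05d}.safetensors" for i in range(1, total + 1)]

def missing_shards(index, present_files):
    """Shard files referenced in index["weight_map"] that are absent from present_files."""
    needed = set(index["weight_map"].values())
    return sorted(needed - set(present_files))

def check_download(directory):
    # Hypothetical helper: assumes the index file sits next to the shards.
    with open(os.path.join(directory, "model.safetensors.index.json")) as f:
        index = json.load(f)
    return missing_shards(index, os.listdir(directory))

# Toy index with hypothetical tensor names:
toy_index = {"weight_map": {
    "layer.0.weight": "model-00001-of-00024.safetensors",
    "layer.1.weight": "model-00002-of-00024.safetensors",
}}
print(missing_shards(toy_index, ["model-00001-of-00024.safetensors"]))
```

An empty list from `check_download(path)` means every shard named in the index is present. The check does not verify file sizes or hashes.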

.gitattributes: CHANGED

```diff
@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+tokenizer.json filter=lfs diff=lfs merge=lfs -text
```
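The only change to `.gitattributes` routes `tokenizer.json` through Git LFS, as with the weight shards. This fits the `+3 -0` counts for `tokenizer.json` and each shard in the file list, because an LFS pointer file is three lines long. Below is a rough sketch for checking which filenames the patterns in this hunk catch. It assumes that a gitattributes pattern without a slash is matched against the file's basename, and it approximates gitattributes globbing with Python's `fnmatch`. It covers only the four patterns visible here, not the whole file.

```python
import posixpath
from fnmatch import fnmatchcase

# Only the patterns visible in this hunk; the full .gitattributes has more.
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "tokenizer.json"]

def tracked_by_lfs(path, patterns=LFS_PATTERNS):
    """Approximate check: a slash-free gitattributes pattern is matched against the basename."""
    name = posixpath.basename(path)
    return any(fnmatchcase(name, pattern) for pattern in patterns)

for f in ["tokenizer.json", "tokenizer_config.json", "logs/events.out.tfevents.1", "vocab.json"]:
    print(f, tracked_by_lfs(f))
```

Because the pattern is an exact filename, `tokenizer_config.json` and `vocab.json` stay regular Git files.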

README.md: ADDED (@@ -0,0 +1,286 @@)

```yaml
---
tags:
- unsloth
base_model:
- Qwen/Qwen3-VL-235B-A22B-Thinking-FP8
license: apache-2.0
pipeline_tag: image-text-to-text
---
```

> [!NOTE]
> Includes Unsloth **chat template fixes**! <br> For `llama.cpp`, use `--jinja`
>

<div>
<p style="margin-top: 0;margin-bottom: 0;">
<em><a href="https://docs.unsloth.ai/basics/unsloth-dynamic-v2.0-gguf">Unsloth Dynamic 2.0</a> achieves superior accuracy & outperforms other leading quants.</em>
</p>
<div style="display: flex; gap: 5px; align-items: center; ">
<a href="https://github.com/unslothai/unsloth/">
<img src="https://github.com/unslothai/unsloth/raw/main/images/unsloth%20new%20logo.png" width="133">
</a>
<a href="https://discord.gg/unsloth">
<img src="https://github.com/unslothai/unsloth/raw/main/images/Discord%20button.png" width="173">
</a>
<a href="https://docs.unsloth.ai/">
<img src="https://raw.githubusercontent.com/unslothai/unsloth/refs/heads/main/images/documentation%20green%20button.png" width="143">
</a>
</div>
</div>

<a href="https://chat.qwenlm.ai/" target="_blank" style="margin: 2px;">
<img alt="Chat" src="https://img.shields.io/badge/%F0%9F%92%9C%EF%B8%8F%20Qwen%20Chat%20-536af5" style="display: inline-block; vertical-align: middle;"/>
</a>

# Qwen3-VL-235B-A22B-Thinking-FP8

> This repository contains an FP8 quantized version of the [Qwen3-VL-235B-A22B-Thinking](https://huggingface.co/Qwen/Qwen3-VL-235B-A22B-Thinking) model. The quantization method is fine-grained FP8 quantization with a block size of 128, and its performance metrics are nearly identical to those of the original BF16 model. Enjoy!
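Below is a toy NumPy simulation of fine-grained FP8 quantization with a block size of 128. It is not the tool used to produce these weights, and it rests on three assumptions:

- Weights are split into 128×128 tiles.
- Each tile has one scale, equal to the tile's absolute maximum divided by 448, the largest finite float8 E4M3 value.
- Values are rounded to the nearest E4M3 value.

Real kernels store genuine 8-bit values. This sketch only emulates their rounding in float32.

```python
import numpy as np

E4M3_MAX = 448.0  # largest finite float8 E4M3 ("fn" variant) value

def round_to_e4m3(x):
    """Simulate round-to-nearest into float8 E4M3 (3 mantissa bits, min normal 2**-6)."""
    x = np.clip(x, -E4M3_MAX, E4M3_MAX)
    mag = np.abs(x)
    # binade exponent; values below the smallest normal share the subnormal spacing
    exp = np.floor(np.log2(np.maximum(mag, 2.0 ** -6)))
    step = 2.0 ** (exp - 3)  # 3 mantissa bits -> 8 representable steps per binade
    return np.sign(x) * np.round(mag / step) * step

def quantize_blockwise(w, block=128):
    """One scale per (block x block) tile: scale = tile amax / E4M3_MAX."""
    rows, cols = w.shape
    n_r, n_c = -(-rows // block), -(-cols // block)  # ceil division
    scales = np.ones((n_r, n_c), dtype=np.float32)
    q = np.zeros(w.shape, dtype=np.float32)
    for i in range(n_r):
        for j in range(n_c):
            tile = (slice(i * block, (i + 1) * block), slice(j * block, (j + 1) * block))
            amax = float(np.abs(w[tile]).max())
            if amax > 0:
                scales[i, j] = amax / E4M3_MAX
            q[tile] = round_to_e4m3(w[tile] / scales[i, j])
    return q, scales

def dequantize_blockwise(q, scales, block=128):
    # spread each tile's scale over its tile, cropping padding at the right/bottom edges
    full = np.kron(scales, np.ones((block, block), dtype=np.float32))
    return q * full[: q.shape[0], : q.shape[1]]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((256, 384)).astype(np.float32)
    q, s = quantize_blockwise(w)
    w_hat = dequantize_blockwise(q, s)
    print("scales:", s.shape, "relative error:", np.linalg.norm(w - w_hat) / np.linalg.norm(w))
```

Because each tile has its own scale, an outlier only coarsens the rounding inside its own 128×128 tile rather than across the whole matrix.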

Meet Qwen3-VL, the most powerful vision-language model in the Qwen series to date.

This generation delivers comprehensive upgrades across the board: superior text understanding and generation, deeper visual perception and reasoning, extended context length, enhanced comprehension of spatial relations and video dynamics, and stronger agent interaction capabilities.

Qwen3-VL is available in Dense and MoE architectures that scale from edge to cloud, in both Instruct and reasoning‑enhanced Thinking editions for flexible, on‑demand deployment.

#### Key Enhancements:

* **Visual Agent**: Operates PC and mobile GUIs, recognizing elements, understanding their functions, invoking tools, and completing tasks.

* **Visual Coding Boost**: Generates Draw.io diagrams and HTML/CSS/JS code from images and videos.

* **Advanced Spatial Perception**: Judges object positions, viewpoints, and occlusions; provides stronger 2D grounding and enables 3D grounding for spatial reasoning and embodied AI.

* **Long Context & Video Understanding**: Native 256K context, expandable to 1M; handles books and hours-long video with full recall and second-level indexing.

* **Enhanced Multimodal Reasoning**: Excels at STEM and math problems, with causal analysis and logical, evidence-based answers.

* **Upgraded Visual Recognition**: Broader, higher-quality pretraining lets the model “recognize everything”: celebrities, anime, products, landmarks, flora and fauna, and more.

* **Expanded OCR**: Supports 32 languages (up from 19); robust in low light, blur, and tilt; better with rare/ancient characters and jargon; improved long-document structure parsing.

* **Text Understanding on par with pure LLMs**: Seamless text–vision fusion for lossless, unified comprehension.

#### Model Architecture Updates:

<p align="center">
    <img src="https://qianwen-res.oss-accelerate.aliyuncs.com/Qwen3-VL/qwen3vl_arc.jpg" width="80%"/>
</p>

1. **Interleaved-MRoPE**: Full‑frequency allocation over time, width, and height via robust positional embeddings, enhancing long‑horizon video reasoning.

2. **DeepStack**: Fuses multi‑level ViT features to capture fine‑grained details and sharpen image–text alignment.

3. **Text–Timestamp Alignment**: Moves beyond T‑RoPE to precise, timestamp‑grounded event localization for stronger video temporal modeling.
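Below is a toy illustration of what "full-frequency allocation" means. It is not Qwen3-VL's implementation. It compares two ways of assigning 64 rotary frequency slots to the time, height and width axes:

- **Chunked layout**: each axis gets one contiguous run of slots, roughly how the earlier MRoPE used in Qwen2-VL divided them.
- **Interleaved layout**: slots are assigned round-robin (t, h, w, t, h, w, ...).

The slot count and section sizes are made up.

```python
import numpy as np

AXES = ("t", "h", "w")  # temporal, height, width position components

def chunked_layout(sections):
    """Chunked layout: a contiguous run of frequency slots per axis."""
    return np.concatenate([np.full(n, axis) for axis, n in enumerate(sections)])

def interleaved_layout(num_slots, num_axes=3):
    """Round-robin layout t, h, w, t, h, w, ... across the whole frequency range."""
    return np.arange(num_slots) % num_axes

def rotation_angles(pos_thw, layout, base=10000.0):
    """Angle per slot: the slot's RoPE frequency times the position on its assigned axis."""
    num_slots = len(layout)
    inv_freq = base ** (-np.arange(num_slots) / num_slots)  # slot 0 fastest, last slowest
    pos = np.asarray(pos_thw, dtype=np.float64)
    return pos[layout] * inv_freq

def slot_range(layout, axis):
    """Lowest and highest frequency slot index assigned to an axis."""
    idx = np.where(layout == axis)[0]
    return int(idx.min()), int(idx.max())

if __name__ == "__main__":
    chunked = chunked_layout([22, 21, 21])
    interleaved = interleaved_layout(64)
    for axis, name in enumerate(AXES):
        print(name, "chunked:", slot_range(chunked, axis), "interleaved:", slot_range(interleaved, axis))
```

In the chunked layout, the time axis only sees the fastest-rotating slots and the width axis only the slowest. Interleaving gives every axis slots at both ends of the spectrum.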

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

This is the weight repository for the FP8 version of Qwen3-VL-235B-A22B-Thinking.

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

---

|

| 83 |

+

|

| 84 |

+

## Model Performance

|

| 85 |

+

|

| 86 |

+

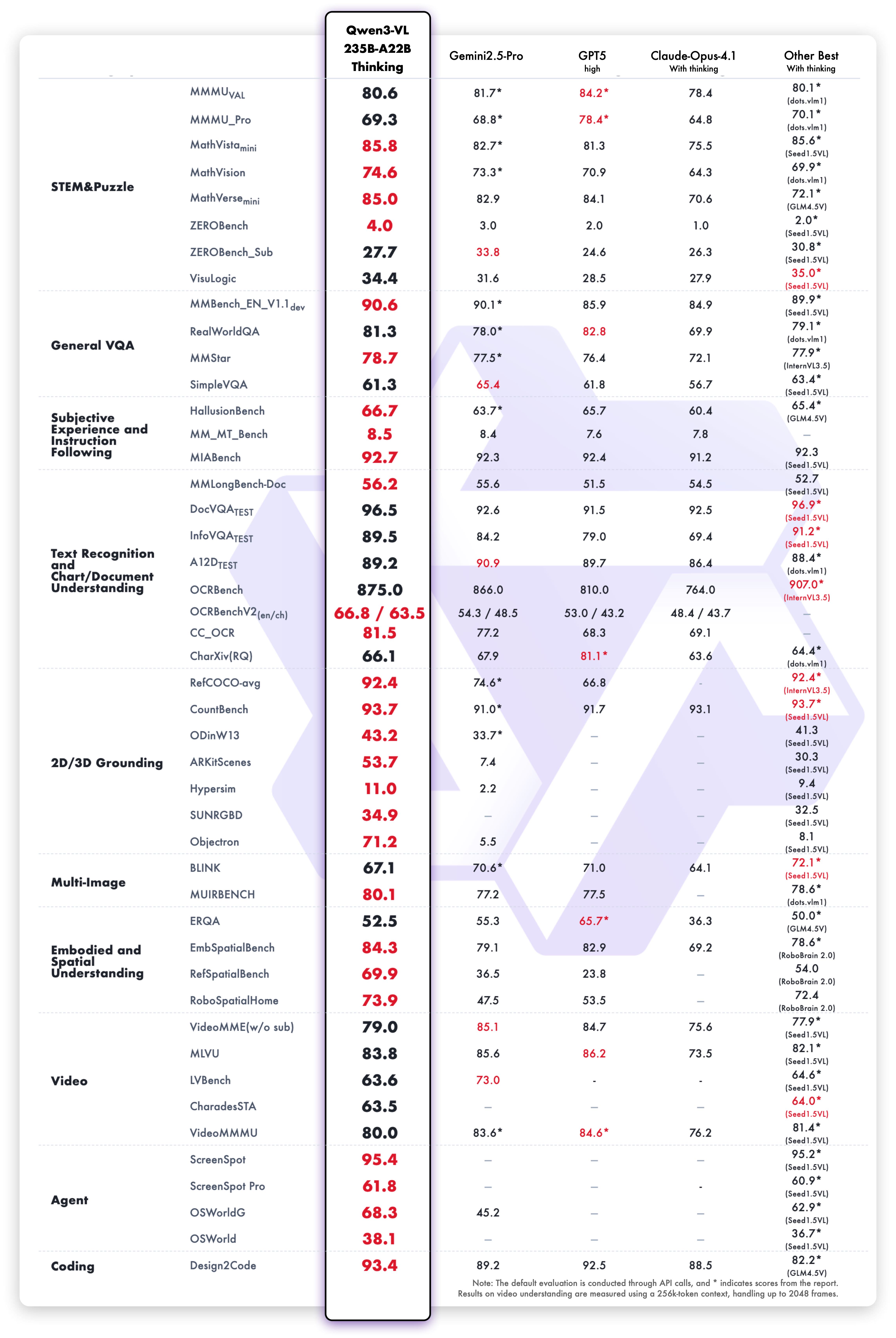

**Multimodal performance**

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

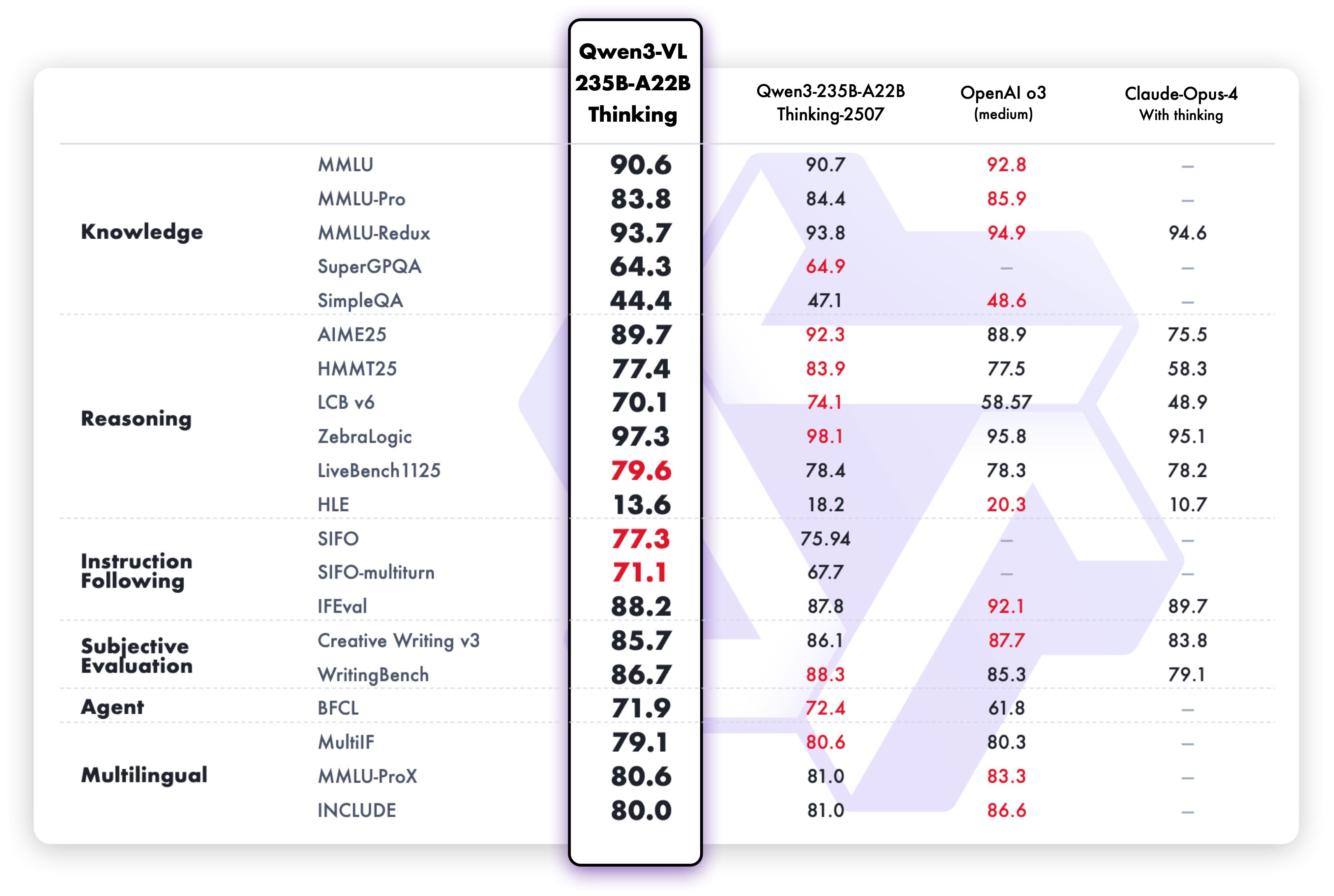

**Pure text performance**

|

| 91 |

+

|

| 92 |

+

|

| 93 |

+

## Quickstart

|

| 94 |

+

|

| 95 |

+

Currently, 🤗 Transformers does not support loading these weights directly. Stay tuned!

|

| 96 |

+

|

| 97 |

+

We recommend deploying the model using vLLM or SGLang, with example launch commands provided below. For details on the runtime environment and deployment, please refer to this [link](https://github.com/QwenLM/Qwen3-VL?tab=readme-ov-file#deployment).

|

| 98 |

+

|

| 99 |

+

### vLLM Inference

|

| 100 |

+

|

| 101 |

+

Here we provide a code snippet demonstrating how to use vLLM to run inference with Qwen3-VL locally. For more details on efficient deployment with vLLM, please refer to the [community deployment guide](https://docs.vllm.ai/projects/recipes/en/latest/Qwen/Qwen3-VL.html).

|

| 102 |

+

|

| 103 |

+

```python

|

| 104 |

+

# -*- coding: utf-8 -*-

|

| 105 |

+

import torch

|

| 106 |

+

from qwen_vl_utils import process_vision_info

|

| 107 |

+

from transformers import AutoProcessor

|

| 108 |

+

from vllm import LLM, SamplingParams

|

| 109 |

+

|

| 110 |

+

import os

|

| 111 |

+

os.environ['VLLM_WORKER_MULTIPROC_METHOD'] = 'spawn'

|

| 112 |

+

|

| 113 |

+

def prepare_inputs_for_vllm(messages, processor):

|

| 114 |

+

text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

|

| 115 |

+

# qwen_vl_utils 0.0.14+ reqired

|

| 116 |

+

image_inputs, video_inputs, video_kwargs = process_vision_info(

|

| 117 |

+

messages,

|

| 118 |

+

image_patch_size=processor.image_processor.patch_size,

|

| 119 |

+

return_video_kwargs=True,

|

| 120 |

+

return_video_metadata=True

|

| 121 |

+

)

|

| 122 |

+

print(f"video_kwargs: {video_kwargs}")

|

| 123 |

+

|

| 124 |

+

mm_data = {}

|

| 125 |

+

if image_inputs is not None:

|

| 126 |

+

mm_data['image'] = image_inputs

|

| 127 |

+

if video_inputs is not None:

|

| 128 |

+

mm_data['video'] = video_inputs

|

| 129 |

+

|

| 130 |

+

return {

|

| 131 |

+

'prompt': text,

|

| 132 |

+

'multi_modal_data': mm_data,

|

| 133 |

+

'mm_processor_kwargs': video_kwargs

|

| 134 |

+

}

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

if __name__ == '__main__':

|

| 138 |

+

# messages = [

|

| 139 |

+

# {

|

| 140 |

+

# "role": "user",

|

| 141 |

+

# "content": [

|

| 142 |

+

# {

|

| 143 |

+

# "type": "video",

|

| 144 |

+

# "video": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen2-VL/space_woaudio.mp4",

|

| 145 |

+

# },

|

| 146 |

+

# {"type": "text", "text": "这段视频有多长"},

|

| 147 |

+

# ],

|

| 148 |

+

# }

|

| 149 |

+

# ]

|

| 150 |

+

|

| 151 |

+

messages = [

|

| 152 |

+

{

|

| 153 |

+

"role": "user",

|

| 154 |

+

"content": [

|

| 155 |

+

{

|

| 156 |

+

"type": "image",

|

| 157 |

+

"image": "https://ofasys-multimodal-wlcb-3-toshanghai.oss-accelerate.aliyuncs.com/wpf272043/keepme/image/receipt.png",

|

| 158 |

+

},

|

| 159 |

+

{"type": "text", "text": "Read all the text in the image."},

|

| 160 |

+

],

|

| 161 |

+

}

|

| 162 |

+

]

|

| 163 |

+

|

| 164 |

+

# TODO: change to your own checkpoint path

|

| 165 |

+

checkpoint_path = "Qwen/Qwen3-VL-235B-A22B-Thinking-FP8"

|

| 166 |

+

processor = AutoProcessor.from_pretrained(checkpoint_path)

|

| 167 |

+

inputs = [prepare_inputs_for_vllm(message, processor) for message in [messages]]

|

| 168 |

+

|

| 169 |

+

llm = LLM(

|

| 170 |

+

model=checkpoint_path,

|

| 171 |

+

trust_remote_code=True,

|

| 172 |

+

gpu_memory_utilization=0.70,

|

| 173 |

+

enforce_eager=False,

|

| 174 |

+

tensor_parallel_size=torch.cuda.device_count(),

|

| 175 |

+

seed=0

|

| 176 |

+

)

|

| 177 |

+

|

| 178 |

+

sampling_params = SamplingParams(

|

| 179 |

+

temperature=0,

|

| 180 |

+

max_tokens=1024,

|

| 181 |

+

top_k=-1,

|

| 182 |

+

stop_token_ids=[],

|

| 183 |

+

)

|

| 184 |

+

|

| 185 |

+

for i, input_ in enumerate(inputs):

|

| 186 |

+

print()

|

| 187 |

+

print('=' * 40)

|

| 188 |

+

print(f"Inputs[{i}]: {input_['prompt']=!r}")

|

| 189 |

+

print('\n' + '>' * 40)

|

| 190 |

+

|

| 191 |

+

outputs = llm.generate(inputs, sampling_params=sampling_params)

|

| 192 |

+

for i, output in enumerate(outputs):

|

| 193 |

+

generated_text = output.outputs[0].text

|

| 194 |

+

print()

|

| 195 |

+

print('=' * 40)

|

| 196 |

+

print(f"Generated text: {generated_text!r}")

|

| 197 |

+

```
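The chat template in this repository ends its generation prompt with `<think>\n`. As a result, a Thinking model's completion usually begins with its reasoning and contains `</think>` before the final answer. The minimal helper below separates the two. It applies the same string operations that the chat template uses to split earlier assistant turns. The sample strings are invented.

```python
def split_reasoning(text):
    """Split generated text into (reasoning, answer) around '</think>'."""
    if "</think>" not in text:
        return "", text
    # Same operations as the template's reasoning_content / content split
    reasoning = text.split("</think>")[0].rstrip("\n").split("<think>")[-1].lstrip("\n")
    answer = text.split("</think>")[-1].lstrip("\n")
    return reasoning, answer

reasoning, answer = split_reasoning("Let me read the receipt.\n</think>\n\nTotal: 12.50")
print(repr(reasoning), repr(answer))
```

In the vLLM loop above, `split_reasoning(generated_text)` gives the answer without the reasoning trace. If `max_tokens` cuts generation off before `</think>`, the helper returns the whole text as the answer.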

### SGLang Inference

Here we provide a code snippet demonstrating how to use SGLang to run inference with Qwen3-VL locally.

```python
import time

import torch
from PIL import Image
from sglang import Engine
from qwen_vl_utils import process_vision_info
from transformers import AutoProcessor, AutoConfig

if __name__ == "__main__":
    # TODO: change to your own checkpoint path
    checkpoint_path = "Qwen/Qwen3-VL-235B-A22B-Thinking-FP8"
    processor = AutoProcessor.from_pretrained(checkpoint_path)

    messages = [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "image": "https://ofasys-multimodal-wlcb-3-toshanghai.oss-accelerate.aliyuncs.com/wpf272043/keepme/image/receipt.png",
                },
                {"type": "text", "text": "Read all the text in the image."},
            ],
        }
    ]

    text = processor.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True
    )

    image_inputs, _ = process_vision_info(messages, image_patch_size=processor.image_processor.patch_size)

    llm = Engine(
        model_path=checkpoint_path,
        enable_multimodal=True,
        mem_fraction_static=0.8,
        tp_size=torch.cuda.device_count(),  # one tensor-parallel rank per visible GPU
        attention_backend="fa3"
    )

    start = time.time()
    sampling_params = {"max_new_tokens": 1024}
    response = llm.generate(prompt=text, image_data=image_inputs, sampling_params=sampling_params)
    print(f"Response took: {time.time() - start:.2f}s")
    print(f"Generated text: {response['text']}")
```

## Citation

If you find our work helpful, feel free to cite us.

```
@misc{qwen3technicalreport,
  title={Qwen3 Technical Report},
  author={Qwen Team},
  year={2025},
  eprint={2505.09388},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2505.09388},
}

@article{Qwen2.5-VL,
  title={Qwen2.5-VL Technical Report},
  author={Bai, Shuai and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Song, Sibo and Dang, Kai and Wang, Peng and Wang, Shijie and Tang, Jun and Zhong, Humen and Zhu, Yuanzhi and Yang, Mingkun and Li, Zhaohai and Wan, Jianqiang and Wang, Pengfei and Ding, Wei and Fu, Zheren and Xu, Yiheng and Ye, Jiabo and Zhang, Xi and Xie, Tianbao and Cheng, Zesen and Zhang, Hang and Yang, Zhibo and Xu, Haiyang and Lin, Junyang},
  journal={arXiv preprint arXiv:2502.13923},
  year={2025}
}

@article{Qwen2VL,
  title={Qwen2-VL: Enhancing Vision-Language Model's Perception of the World at Any Resolution},
  author={Wang, Peng and Bai, Shuai and Tan, Sinan and Wang, Shijie and Fan, Zhihao and Bai, Jinze and Chen, Keqin and Liu, Xuejing and Wang, Jialin and Ge, Wenbin and Fan, Yang and Dang, Kai and Du, Mengfei and Ren, Xuancheng and Men, Rui and Liu, Dayiheng and Zhou, Chang and Zhou, Jingren and Lin, Junyang},
  journal={arXiv preprint arXiv:2409.12191},
  year={2024}
}

@article{Qwen-VL,
  title={Qwen-VL: A Versatile Vision-Language Model for Understanding, Localization, Text Reading, and Beyond},
  author={Bai, Jinze and Bai, Shuai and Yang, Shusheng and Wang, Shijie and Tan, Sinan and Wang, Peng and Lin, Junyang and Zhou, Chang and Zhou, Jingren},
  journal={arXiv preprint arXiv:2308.12966},
  year={2023}
}
```

added_tokens.json: ADDED (@@ -0,0 +1,28 @@)

```json
{
  "</think>": 151668,
  "</tool_call>": 151658,
  "</tool_response>": 151666,
  "<think>": 151667,
  "<tool_call>": 151657,
  "<tool_response>": 151665,
  "<|box_end|>": 151649,
  "<|box_start|>": 151648,
  "<|endoftext|>": 151643,
  "<|file_sep|>": 151664,
  "<|fim_middle|>": 151660,
  "<|fim_pad|>": 151662,
  "<|fim_prefix|>": 151659,
  "<|fim_suffix|>": 151661,
  "<|im_end|>": 151645,
  "<|im_start|>": 151644,
  "<|image_pad|>": 151655,
  "<|object_ref_end|>": 151647,
  "<|object_ref_start|>": 151646,
  "<|quad_end|>": 151651,
  "<|quad_start|>": 151650,
  "<|repo_name|>": 151663,
  "<|video_pad|>": 151656,
  "<|vision_end|>": 151653,
  "<|vision_pad|>": 151654,
  "<|vision_start|>": 151652
}
```
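`added_tokens.json` assigns `</think>` the ID 151668. Sometimes you work with token IDs rather than decoded text, for example vLLM's `output.outputs[0].token_ids`. For that case, the minimal sketch below cuts the sequence just after the last `</think>`. All IDs in the example other than 151668 are arbitrary.

```python
THINK_END_ID = 151668  # "</think>" in added_tokens.json

def split_token_ids(output_ids):
    """Return (reasoning_ids, answer_ids); reasoning_ids keeps the closing </think> token."""
    ids = list(output_ids)
    try:
        # Index just past the last </think> token
        cut = len(ids) - ids[::-1].index(THINK_END_ID)
    except ValueError:
        cut = 0  # no </think>: treat the whole sequence as the answer
    return ids[:cut], ids[cut:]

print(split_token_ids([100, 200, THINK_END_ID, 300, 400]))
```

Splitting on the ID rather than on decoded text avoids depending on how the tokenizer renders whitespace around the tag.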

chat_template.jinja: ADDED (@@ -0,0 +1,110 @@)

```jinja
{%- set image_count = namespace(value=0) %}
{%- set video_count = namespace(value=0) %}
{%- macro render_content(content, do_vision_count) %}
    {%- if content is string %}
        {{- content }}
    {%- else %}
        {%- for item in content %}
            {%- if 'image' in item or 'image_url' in item or item.type == 'image' %}
                {%- if do_vision_count %}
                    {%- set image_count.value = image_count.value + 1 %}
                {%- endif %}
                {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}
                <|vision_start|><|image_pad|><|vision_end|>
            {%- elif 'video' in item or item.type == 'video' %}
                {%- if do_vision_count %}
                    {%- set video_count.value = video_count.value + 1 %}
                {%- endif %}
                {%- if add_vision_id %}Video {{ video_count.value }}: {% endif -%}
                <|vision_start|><|video_pad|><|vision_end|>
            {%- elif 'text' in item %}
                {{- item.text }}
            {%- endif %}
        {%- endfor %}
    {%- endif %}
{%- endmacro %}
{%- if tools %}
    {{- '<|im_start|>system\n' }}
    {%- if messages[0].role == 'system' %}
        {{- render_content(messages[0].content, false) + '\n\n' }}
    {%- endif %}
    {{- "# Tools\n\nYou may call one or more functions to assist with the user query.\n\nYou are provided with function signatures within <tools></tools> XML tags:\n<tools>" }}
    {%- for tool in tools %}
        {{- "\n" }}
        {{- tool | tojson }}
    {%- endfor %}
    {{- "\n</tools>\n\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\n<tool_call>\n{\"name\": <function-name>, \"arguments\": <args-json-object>}\n</tool_call><|im_end|>\n" }}
{%- else %}
    {%- if messages[0].role == 'system' %}
        {{- '<|im_start|>system\n' + render_content(messages[0].content, false) + '<|im_end|>\n' }}
    {%- endif %}
{%- endif %}
{%- set ns = namespace(multi_step_tool=true, last_query_index=messages|length - 1) %}
{%- for message in messages[::-1] %}
    {%- set index = (messages|length - 1) - loop.index0 %}
    {%- if ns.multi_step_tool and message.role == "user" %}
        {%- set content = render_content(message.content, false) %}
        {%- if not(content.startswith('<tool_response>') and content.endswith('</tool_response>')) %}
            {%- set ns.multi_step_tool = false %}
            {%- set ns.last_query_index = index %}
        {%- endif %}
    {%- endif %}
{%- endfor %}
{%- for message in messages %}
    {%- set content = render_content(message.content, True) %}
    {%- if (message.role == "user") or (message.role == "system" and not loop.first) %}
        {{- '<|im_start|>' + message.role + '\n' + content + '<|im_end|>' + '\n' }}
    {%- elif message.role == "assistant" %}
        {%- set reasoning_content = '' %}
        {%- if message.reasoning_content is string %}
            {%- set reasoning_content = message.reasoning_content %}
        {%- else %}
            {%- if '</think>' in content %}
                {%- set reasoning_content = content.split('</think>')[0].rstrip('\n').split('<think>')[-1].lstrip('\n') %}
                {%- set content = content.split('</think>')[-1].lstrip('\n') %}
            {%- endif %}
        {%- endif %}
        {%- if loop.index0 > ns.last_query_index %}
            {%- if loop.last or (not loop.last and reasoning_content) %}
                {{- '<|im_start|>' + message.role + '\n<think>\n' + reasoning_content.strip('\n') + '\n</think>\n\n' + content.lstrip('\n') }}
            {%- else %}
                {{- '<|im_start|>' + message.role + '\n' + content }}
            {%- endif %}
        {%- else %}
            {{- '<|im_start|>' + message.role + '\n' + content }}
        {%- endif %}
        {%- if message.tool_calls %}
            {%- for tool_call in message.tool_calls %}
                {%- if (loop.first and content) or (not loop.first) %}
                    {{- '\n' }}
                {%- endif %}
                {%- if tool_call.function %}
                    {%- set tool_call = tool_call.function %}
                {%- endif %}
                {{- '<tool_call>\n{"name": "' }}
                {{- tool_call.name }}
                {{- '", "arguments": ' }}
                {%- if tool_call.arguments is string %}
                    {{- tool_call.arguments }}
                {%- else %}
                    {{- tool_call.arguments | tojson }}
                {%- endif %}
                {{- '}\n</tool_call>' }}
            {%- endfor %}
        {%- endif %}
        {{- '<|im_end|>\n' }}
    {%- elif message.role == "tool" %}
        {%- if loop.first or (messages[loop.index0 - 1].role != "tool") %}
            {{- '<|im_start|>user' }}
        {%- endif %}
        {{- '\n<tool_response>\n' }}
        {{- content }}
        {{- '\n</tool_response>' }}
        {%- if loop.last or (messages[loop.index0 + 1].role != "tool") %}
            {{- '<|im_end|>\n' }}
        {%- endif %}
    {%- endif %}
{%- endfor %}
{%- if add_generation_prompt %}
    {{- '<|im_start|>assistant\n<think>\n' }}
{%- endif %}
```
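For a single user turn with no system message and no tools, this template reduces to a short string. The pure-Python sketch below mirrors only that one path. It is handy for checking what `processor.apply_chat_template(..., add_generation_prompt=True)` should return for the README's receipt example. It ignores `add_vision_id`, system messages, tools and multi-turn history, so rely on the real template for anything else. `render_single_user_turn` is a hypothetical helper name.

```python
def render_single_user_turn(parts, add_generation_prompt=True):
    """Mirror the template's output for one user message (no system prompt, no tools)."""
    content = ""
    for part in parts:
        # Same branch order as render_content: image, then video, then text
        if "image" in part or "image_url" in part or part.get("type") == "image":
            content += "<|vision_start|><|image_pad|><|vision_end|>"
        elif "video" in part or part.get("type") == "video":
            content += "<|vision_start|><|video_pad|><|vision_end|>"
        elif "text" in part:
            content += part["text"]
    prompt = "<|im_start|>user\n" + content + "<|im_end|>\n"
    if add_generation_prompt:
        # Opens the assistant turn and its reasoning block, as the template does
        prompt += "<|im_start|>assistant\n<think>\n"
    return prompt

parts = [
    {"type": "image", "image": "receipt.png"},
    {"type": "text", "text": "Read all the text in the image."},
]
print(repr(render_single_user_turn(parts)))
```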

chat_template.json: ADDED (@@ -0,0 +1,3 @@)

```json
{
  "chat_template": "{%- set image_count = namespace(value=0) %}\n{%- set video_count = namespace(value=0) %}\n{%- macro render_content(content, do_vision_count) %}\n {%- if content is string %}\n {{- content }}\n {%- else %}\n {%- for item in content %}\n {%- if 'image' in item or 'image_url' in item or item.type == 'image' %}\n {%- if do_vision_count %}\n {%- set image_count.value = image_count.value + 1 %}\n {%- endif %}\n {%- if add_vision_id %}Picture {{ image_count.value }}: {% endif -%}\n <|vision_start|><|image_pad|><|vision_end|>\n {%- elif 'video' in item or item.type == 'video' %}\n {%- if do_vision_count %}\n {%- set video_count.value = video_count.value + 1 %}\n {%- endif %}\n {%- if add_vision_id %}Video {{ video_count.value }}: {% endif -%}\n <|vision_start|><|video_pad|><|vision_end|>\n {%- elif 'text' in item %}\n {{- item.text }}\n {%- endif %}\n {%- endfor %}\n {%- endif %}\n{%- endmacro %}\n{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0].role == 'system' %}\n {{- render_content(messages[0].content, false) + '\\n\\n' }}\n {%- endif %}\n {{- \"# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0].role == 'system' %}\n {{- '<|im_start|>system\\n' + render_content(messages[0].content, false) + '<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- set ns = namespace(multi_step_tool=true, last_query_index=messages|length - 1) %}\n{%- for message in messages[::-1] %}\n {%- set index = (messages|length - 1) - loop.index0 %}\n {%- if ns.multi_step_tool and message.role == \"user\" %}\n {%- set content = render_content(message.content, false) %}\n {%- if not(content.startswith('<tool_response>') and content.endswith('</tool_response>')) %}\n {%- set ns.multi_step_tool = false %}\n {%- set ns.last_query_index = index %}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- for message in messages %}\n {%- set content = render_content(message.content, True) %}\n {%- if (message.role == \"user\") or (message.role == \"system\" and not loop.first) %}\n {{- '<|im_start|>' + message.role + '\\n' + content + '<|im_end|>' + '\\n' }}\n {%- elif message.role == \"assistant\" %}\n {%- set reasoning_content = '' %}\n {%- if message.reasoning_content is string %}\n {%- set reasoning_content = message.reasoning_content %}\n {%- else %}\n {%- if '</think>' in content %}\n {%- set reasoning_content = content.split('</think>')[0].rstrip('\\n').split('<think>')[-1].lstrip('\\n') %}\n {%- set content = content.split('</think>')[-1].lstrip('\\n') %}\n {%- endif %}\n {%- endif %}\n {%- if loop.index0 > ns.last_query_index %}\n {%- if loop.last or (not loop.last and reasoning_content) %}\n {{- '<|im_start|>' + message.role + '\\n<think>\\n' + reasoning_content.strip('\\n') + '\\n</think>\\n\\n' + content.lstrip('\\n') }}\n {%- else %}\n {{- '<|im_start|>' + message.role + '\\n' + content }}\n {%- endif %}\n {%- else %}\n {{- '<|im_start|>' + message.role + '\\n' + content }}\n {%- endif %}\n {%- if message.tool_calls %}\n {%- for tool_call in message.tool_calls %}\n {%- if (loop.first and content) or (not loop.first) %}\n {{- '\\n' }}\n {%- endif %}\n {%- if tool_call.function %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {%- if tool_call.arguments is string %}\n {{- tool_call.arguments }}\n {%- else %}\n {{- tool_call.arguments | tojson }}\n {%- endif %}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {%- endif %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if loop.first or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {{- content }}\n {{- '\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n<think>\\n' }}\n{%- endif %}\n"
}
```
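When `tools` are supplied, both template files tell the model to return each call inside `<tool_call></tool_call>` tags, as a JSON object with `name` and `arguments`. Below is a minimal parser for that format. It assumes the tags themselves are well formed, and it skips malformed JSON instead of raising an error. `get_weather` is a hypothetical tool.

```python
import json
import re

# Non-greedy so that consecutive calls are captured separately
TOOL_CALL_RE = re.compile(r"<tool_call>\s*(.*?)\s*</tool_call>", re.DOTALL)

def parse_tool_calls(text):
    """Extract {"name", "arguments"} dicts from <tool_call> blocks in model output."""
    calls = []
    for raw in TOOL_CALL_RE.findall(text):
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip a malformed call instead of failing the whole response
        if isinstance(obj, dict) and "name" in obj:
            calls.append({"name": obj["name"], "arguments": obj.get("arguments", {})})
    return calls

sample = (
    "Let me check.\n"
    '<tool_call>\n{"name": "get_weather", "arguments": {"city": "Paris"}}\n</tool_call>\n'
    "<tool_call>\n{not valid json}\n</tool_call>"
)
print(parse_tool_calls(sample))
```

For a Thinking model, run `parse_tool_calls` on the answer part after `</think>`, so that tags mentioned inside the reasoning are not picked up.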

|

config.json

ADDED

|

@@ -0,0 +1,421 @@

+{
+  "architectures": [
+    "Qwen3VLMoeForConditionalGeneration"
+  ],
+  "image_token_id": 151655,
+  "model_type": "qwen3_vl_moe",
+  "pad_token_id": 151654,
+  "quantization_config": {
+    "activation_scheme": "dynamic",
+    "fmt": "e4m3",
+    "ignored_layers": [
+      "lm_head",
+      "model.visual.merger.linear_fc1",
+      "model.visual.merger.linear_fc2",
+      "model.visual.merger.norm",
+      "model.visual.patch_embed.proj",
+      "model.visual.pos_embed",
+      "visual.merger.linear_fc1",
+      "visual.merger.linear_fc2",
+      "visual.merger.norm",
+      "visual.patch_embed.proj",
+      "visual.pos_embed",
+      "model.visual.blocks.0.attn.proj",
+      "model.visual.blocks.0.attn.qkv",
+      "model.visual.blocks.0.mlp.linear_fc1",
+      "model.visual.blocks.0.mlp.linear_fc2",
+      "visual.blocks.0.attn.proj",
+      "visual.blocks.0.attn.qkv_proj",
+      "visual.blocks.0.mlp.linear_fc1",
+      "visual.blocks.0.mlp.linear_fc2",
+      "model.visual.blocks.1.attn.proj",
+      "model.visual.blocks.1.attn.qkv",
+      "model.visual.blocks.1.mlp.linear_fc1",
+      "model.visual.blocks.1.mlp.linear_fc2",
+      "visual.blocks.1.attn.proj",
+      "visual.blocks.1.attn.qkv_proj",
+      "visual.blocks.1.mlp.linear_fc1",
+      "visual.blocks.1.mlp.linear_fc2",
+      "model.visual.blocks.2.attn.proj",
+      "model.visual.blocks.2.attn.qkv",
+      "model.visual.blocks.2.mlp.linear_fc1",
+      "model.visual.blocks.2.mlp.linear_fc2",
+      "visual.blocks.2.attn.proj",
+      "visual.blocks.2.attn.qkv_proj",
+      "visual.blocks.2.mlp.linear_fc1",
+      "visual.blocks.2.mlp.linear_fc2",
+      "model.visual.blocks.3.attn.proj",
+      "model.visual.blocks.3.attn.qkv",
+      "model.visual.blocks.3.mlp.linear_fc1",
+      "model.visual.blocks.3.mlp.linear_fc2",
+      "visual.blocks.3.attn.proj",
+      "visual.blocks.3.attn.qkv_proj",
+      "visual.blocks.3.mlp.linear_fc1",
+      "visual.blocks.3.mlp.linear_fc2",
+      "model.visual.blocks.4.attn.proj",
+      "model.visual.blocks.4.attn.qkv",
+      "model.visual.blocks.4.mlp.linear_fc1",
+      "model.visual.blocks.4.mlp.linear_fc2",
+      "visual.blocks.4.attn.proj",
+      "visual.blocks.4.attn.qkv_proj",
+      "visual.blocks.4.mlp.linear_fc1",
+      "visual.blocks.4.mlp.linear_fc2",
+      "model.visual.blocks.5.attn.proj",
+      "model.visual.blocks.5.attn.qkv",
+      "model.visual.blocks.5.mlp.linear_fc1",
+      "model.visual.blocks.5.mlp.linear_fc2",
+      "visual.blocks.5.attn.proj",
+      "visual.blocks.5.attn.qkv_proj",
+      "visual.blocks.5.mlp.linear_fc1",
+      "visual.blocks.5.mlp.linear_fc2",
+      "model.visual.blocks.6.attn.proj",
+      "model.visual.blocks.6.attn.qkv",
+      "model.visual.blocks.6.mlp.linear_fc1",
+      "model.visual.blocks.6.mlp.linear_fc2",
+      "visual.blocks.6.attn.proj",
+      "visual.blocks.6.attn.qkv_proj",
+      "visual.blocks.6.mlp.linear_fc1",
+      "visual.blocks.6.mlp.linear_fc2",
+      "model.visual.blocks.7.attn.proj",
+      "model.visual.blocks.7.attn.qkv",
+      "model.visual.blocks.7.mlp.linear_fc1",
+      "model.visual.blocks.7.mlp.linear_fc2",
+      "visual.blocks.7.attn.proj",
+      "visual.blocks.7.attn.qkv_proj",
+      "visual.blocks.7.mlp.linear_fc1",
+      "visual.blocks.7.mlp.linear_fc2",
+      "model.visual.blocks.8.attn.proj",
+      "model.visual.blocks.8.attn.qkv",
+      "model.visual.blocks.8.mlp.linear_fc1",
+      "model.visual.blocks.8.mlp.linear_fc2",
+      "visual.blocks.8.attn.proj",
+      "visual.blocks.8.attn.qkv_proj",
+      "visual.blocks.8.mlp.linear_fc1",
+      "visual.blocks.8.mlp.linear_fc2",
+      "model.visual.blocks.9.attn.proj",
+      "model.visual.blocks.9.attn.qkv",
+      "model.visual.blocks.9.mlp.linear_fc1",
+      "model.visual.blocks.9.mlp.linear_fc2",
+      "visual.blocks.9.attn.proj",
+      "visual.blocks.9.attn.qkv_proj",
+      "visual.blocks.9.mlp.linear_fc1",
+      "visual.blocks.9.mlp.linear_fc2",
+      "model.visual.blocks.10.attn.proj",
+      "model.visual.blocks.10.attn.qkv",
+      "model.visual.blocks.10.mlp.linear_fc1",
+      "model.visual.blocks.10.mlp.linear_fc2",
+      "visual.blocks.10.attn.proj",
+      "visual.blocks.10.attn.qkv_proj",
+      "visual.blocks.10.mlp.linear_fc1",
+      "visual.blocks.10.mlp.linear_fc2",
+      "model.visual.blocks.11.attn.proj",
+      "model.visual.blocks.11.attn.qkv",
+      "model.visual.blocks.11.mlp.linear_fc1",
+      "model.visual.blocks.11.mlp.linear_fc2",
+      "visual.blocks.11.attn.proj",
+      "visual.blocks.11.attn.qkv_proj",
+      "visual.blocks.11.mlp.linear_fc1",
+      "visual.blocks.11.mlp.linear_fc2",
+      "model.visual.blocks.12.attn.proj",
+      "model.visual.blocks.12.attn.qkv",
+      "model.visual.blocks.12.mlp.linear_fc1",
+      "model.visual.blocks.12.mlp.linear_fc2",
+      "visual.blocks.12.attn.proj",
+      "visual.blocks.12.attn.qkv_proj",
+      "visual.blocks.12.mlp.linear_fc1",
+      "visual.blocks.12.mlp.linear_fc2",
+      "model.visual.blocks.13.attn.proj",
+      "model.visual.blocks.13.attn.qkv",
+      "model.visual.blocks.13.mlp.linear_fc1",
+      "model.visual.blocks.13.mlp.linear_fc2",
+      "visual.blocks.13.attn.proj",
+      "visual.blocks.13.attn.qkv_proj",
+      "visual.blocks.13.mlp.linear_fc1",
+      "visual.blocks.13.mlp.linear_fc2",
+      "model.visual.blocks.14.attn.proj",
+      "model.visual.blocks.14.attn.qkv",
+      "model.visual.blocks.14.mlp.linear_fc1",
+      "model.visual.blocks.14.mlp.linear_fc2",
+      "visual.blocks.14.attn.proj",
+      "visual.blocks.14.attn.qkv_proj",
+      "visual.blocks.14.mlp.linear_fc1",
+      "visual.blocks.14.mlp.linear_fc2",
+      "model.visual.blocks.15.attn.proj",
+      "model.visual.blocks.15.attn.qkv",
+      "model.visual.blocks.15.mlp.linear_fc1",
+      "model.visual.blocks.15.mlp.linear_fc2",
+      "visual.blocks.15.attn.proj",
+      "visual.blocks.15.attn.qkv_proj",
+      "visual.blocks.15.mlp.linear_fc1",
+      "visual.blocks.15.mlp.linear_fc2",
+      "model.visual.blocks.16.attn.proj",
+      "model.visual.blocks.16.attn.qkv",
+      "model.visual.blocks.16.mlp.linear_fc1",
+      "model.visual.blocks.16.mlp.linear_fc2",
+      "visual.blocks.16.attn.proj",
+      "visual.blocks.16.attn.qkv_proj",
+      "visual.blocks.16.mlp.linear_fc1",
+      "visual.blocks.16.mlp.linear_fc2",
+      "model.visual.blocks.17.attn.proj",
+      "model.visual.blocks.17.attn.qkv",
+      "model.visual.blocks.17.mlp.linear_fc1",
+      "model.visual.blocks.17.mlp.linear_fc2",
+      "visual.blocks.17.attn.proj",
+      "visual.blocks.17.attn.qkv_proj",
+      "visual.blocks.17.mlp.linear_fc1",
+      "visual.blocks.17.mlp.linear_fc2",
+      "model.visual.blocks.18.attn.proj",
+      "model.visual.blocks.18.attn.qkv",
+      "model.visual.blocks.18.mlp.linear_fc1",
+      "model.visual.blocks.18.mlp.linear_fc2",
+      "visual.blocks.18.attn.proj",
+      "visual.blocks.18.attn.qkv_proj",
+      "visual.blocks.18.mlp.linear_fc1",
+      "visual.blocks.18.mlp.linear_fc2",
+      "model.visual.blocks.19.attn.proj",
+      "model.visual.blocks.19.attn.qkv",
+      "model.visual.blocks.19.mlp.linear_fc1",
+      "model.visual.blocks.19.mlp.linear_fc2",
+      "visual.blocks.19.attn.proj",
+      "visual.blocks.19.attn.qkv_proj",
+      "visual.blocks.19.mlp.linear_fc1",
+      "visual.blocks.19.mlp.linear_fc2",
+      "model.visual.blocks.20.attn.proj",
+      "model.visual.blocks.20.attn.qkv",
+      "model.visual.blocks.20.mlp.linear_fc1",
+      "model.visual.blocks.20.mlp.linear_fc2",
+      "visual.blocks.20.attn.proj",
+      "visual.blocks.20.attn.qkv_proj",
+      "visual.blocks.20.mlp.linear_fc1",
+      "visual.blocks.20.mlp.linear_fc2",
+      "model.visual.blocks.21.attn.proj",
+      "model.visual.blocks.21.attn.qkv",
+      "model.visual.blocks.21.mlp.linear_fc1",
+      "model.visual.blocks.21.mlp.linear_fc2",
+      "visual.blocks.21.attn.proj",
+      "visual.blocks.21.attn.qkv_proj",
+      "visual.blocks.21.mlp.linear_fc1",
+      "visual.blocks.21.mlp.linear_fc2",
+      "model.visual.blocks.22.attn.proj",
+      "model.visual.blocks.22.attn.qkv",
+      "model.visual.blocks.22.mlp.linear_fc1",
+      "model.visual.blocks.22.mlp.linear_fc2",
+      "visual.blocks.22.attn.proj",
+      "visual.blocks.22.attn.qkv_proj",
+      "visual.blocks.22.mlp.linear_fc1",
+      "visual.blocks.22.mlp.linear_fc2",
+      "model.visual.blocks.23.attn.proj",
+      "model.visual.blocks.23.attn.qkv",
+      "model.visual.blocks.23.mlp.linear_fc1",
+      "model.visual.blocks.23.mlp.linear_fc2",
+      "visual.blocks.23.attn.proj",
+      "visual.blocks.23.attn.qkv_proj",
+      "visual.blocks.23.mlp.linear_fc1",
+      "visual.blocks.23.mlp.linear_fc2",
+      "model.visual.blocks.24.attn.proj",
+      "model.visual.blocks.24.attn.qkv",
+      "model.visual.blocks.24.mlp.linear_fc1",
+      "model.visual.blocks.24.mlp.linear_fc2",
+      "visual.blocks.24.attn.proj",
+      "visual.blocks.24.attn.qkv_proj",
+      "visual.blocks.24.mlp.linear_fc1",
+      "visual.blocks.24.mlp.linear_fc2",
+      "model.visual.blocks.25.attn.proj",
+      "model.visual.blocks.25.attn.qkv",
+      "model.visual.blocks.25.mlp.linear_fc1",
+      "model.visual.blocks.25.mlp.linear_fc2",
+      "visual.blocks.25.attn.proj",
+      "visual.blocks.25.attn.qkv_proj",
+      "visual.blocks.25.mlp.linear_fc1",
+      "visual.blocks.25.mlp.linear_fc2",
+      "model.visual.blocks.26.attn.proj",
+      "model.visual.blocks.26.attn.qkv",
+      "model.visual.blocks.26.mlp.linear_fc1",
+      "model.visual.blocks.26.mlp.linear_fc2",
+      "visual.blocks.26.attn.proj",
+      "visual.blocks.26.attn.qkv_proj",
+      "visual.blocks.26.mlp.linear_fc1",
+      "visual.blocks.26.mlp.linear_fc2",
+      "model.visual.deepstack_merger_list.0.linear_fc1",
+      "model.visual.deepstack_merger_list.0.linear_fc2",
+      "model.visual.deepstack_merger_list.0.norm",
+      "visual.deepstack_merger_list.0.linear_fc1",
+      "visual.deepstack_merger_list.0.linear_fc2",
+      "visual.deepstack_merger_list.0.norm",
+      "model.visual.deepstack_merger_list.1.linear_fc1",
+      "model.visual.deepstack_merger_list.1.linear_fc2",
+      "model.visual.deepstack_merger_list.1.norm",
+      "visual.deepstack_merger_list.1.linear_fc1",
+      "visual.deepstack_merger_list.1.linear_fc2",
+      "visual.deepstack_merger_list.1.norm",
+      "model.visual.deepstack_merger_list.2.linear_fc1",
+      "model.visual.deepstack_merger_list.2.linear_fc2",
+      "model.visual.deepstack_merger_list.2.norm",
+      "visual.deepstack_merger_list.2.linear_fc1",
+      "visual.deepstack_merger_list.2.linear_fc2",
+      "visual.deepstack_merger_list.2.norm",
+      "model.language_model.layers.0.mlp.gate",
+      "model.language_model.layers.1.mlp.gate",
+      "model.language_model.layers.2.mlp.gate",
+      "model.language_model.layers.3.mlp.gate",
+      "model.language_model.layers.4.mlp.gate",
+      "model.language_model.layers.5.mlp.gate",
+      "model.language_model.layers.6.mlp.gate",

| 264 |

+