
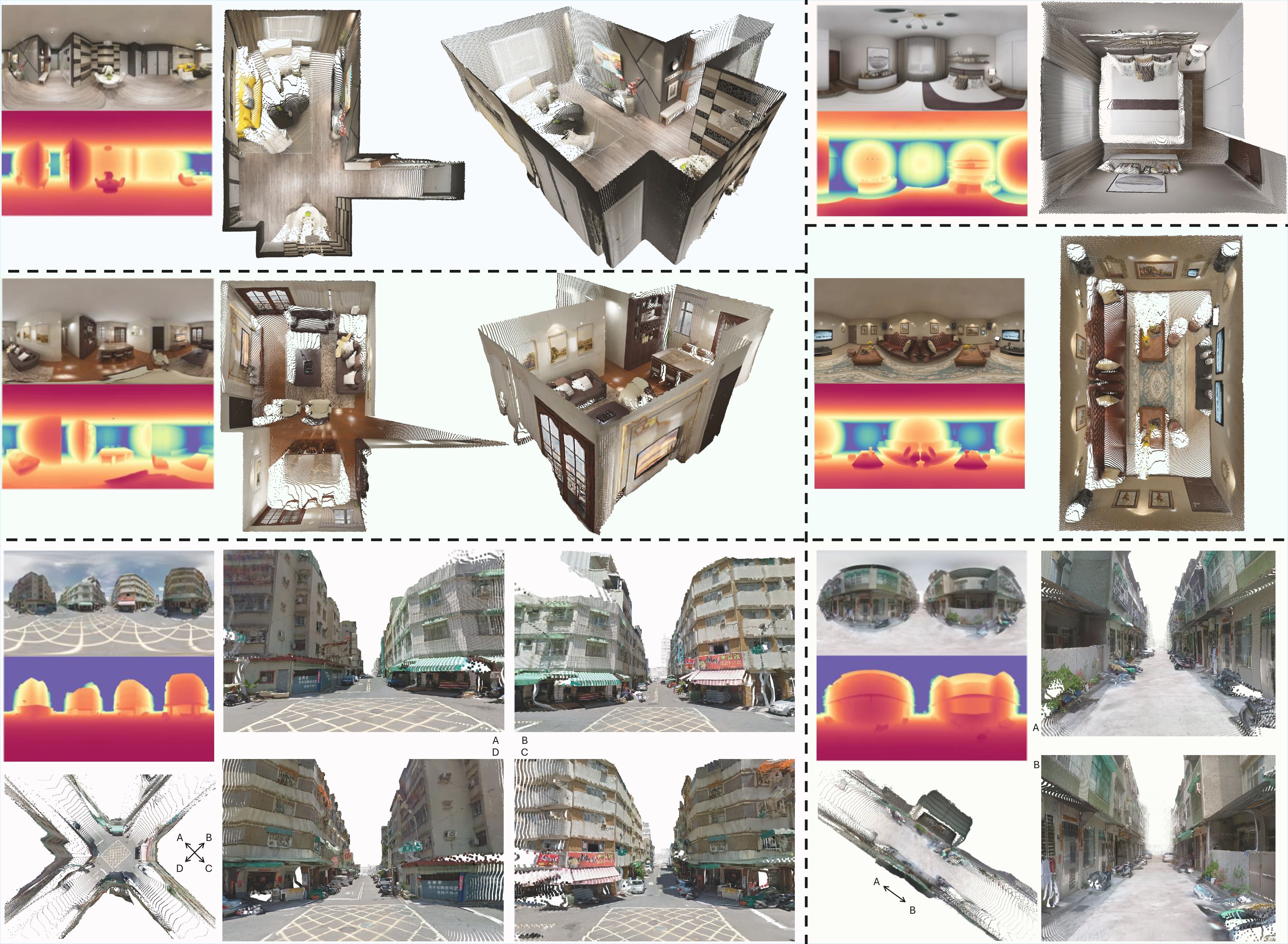

DA²: Depth Anything in Any Direction

DA2 predicts dense, scale-invariant distance from a single 360° panorama in an end-to-end manner, with remarkable geometric fidelity and strong zero-shot generalization.

⬇️ Download

- Download the datasets (please see here for the environment setup):

cd [YOUR_DATA_DIR]

huggingface-cli login

hf download --repo-type dataset haodongli/DA-2 --local-dir [YOUR_DATA_DIR]

- Merge the parts into one `*.tar.gz` file, where `DATASET_NAME` is one of `hypersim_pano`, `vkitti_pano`, `mvs_synth_pano`, `unreal4k_pano`, `3d-ken-burns_pano`, `dynamic_replica_v2_pano`:

cat [DATASET_NAME]/part_* > [DATASET_NAME].tar.gz

- Check the MD5 checksum:

md5sum -c [DATASET_NAME]_checksum.md5

- If the checksum matches, extract the archive:

tar -zxvf [DATASET_NAME].tar.gz

- The data samples will be extracted to `[DATASET_NAME]/`.
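The merge, checksum, and extraction steps above can also be run from Python, for example on systems without `cat` or `md5sum`. This is a minimal sketch, not official tooling: it assumes the repository stores each dataset as `[DATASET_NAME]/part_*` plus `[DATASET_NAME]_checksum.md5` (as the commands above suggest) and that the checksum file uses the standard `md5sum` line format. The helpers `download`, `merge_parts`, `verify`, and `extract` are hypothetical names; `download` relies on `huggingface_hub.snapshot_download` with an `allow_patterns` filter so that only one dataset is fetched.

```python
# Minimal sketch of the download -> merge -> verify -> extract steps in pure Python.
# Assumptions (not stated by the dataset card): each dataset is stored in the repo as
# `NAME/part_*` plus `NAME_checksum.md5`, and the checksum file uses md5sum's
# "<hex digest>  <file name>" line format. All helper names are hypothetical.
import hashlib
import shutil
import tarfile
from pathlib import Path

DATASET_NAMES = [
    "hypersim_pano", "vkitti_pano", "mvs_synth_pano",
    "unreal4k_pano", "3d-ken-burns_pano", "dynamic_replica_v2_pano",
]


def download(name: str, data_dir: str) -> None:
    """Fetch one dataset's parts and checksum file (needs network; may need a prior login)."""
    if name not in DATASET_NAMES:
        raise ValueError(f"unknown dataset: {name}")
    from huggingface_hub import snapshot_download  # optional dependency, imported lazily
    snapshot_download(
        repo_id="haodongli/DA-2",
        repo_type="dataset",
        local_dir=data_dir,
        allow_patterns=[f"{name}/part_*", f"{name}_checksum.md5"],  # assumed repo layout
    )


def merge_parts(name: str, data_dir: str) -> Path:
    """Like `cat NAME/part_* > NAME.tar.gz`: concatenate the parts in sorted order."""
    root = Path(data_dir)
    parts = sorted((root / name).glob("part_*"))
    if not parts:
        raise FileNotFoundError(f"no part_* files in {root / name}")
    archive = root / f"{name}.tar.gz"
    with archive.open("wb") as out:
        for part in parts:
            with part.open("rb") as src:
                shutil.copyfileobj(src, out)  # streams, so large parts are not loaded into memory
    return archive


def md5_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hex MD5 digest of a file, read in 1 MiB chunks by default."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def verify(name: str, data_dir: str) -> bool:
    """Like `md5sum -c NAME_checksum.md5` run in data_dir: every listed file must match."""
    root = Path(data_dir)
    lines = (root / f"{name}_checksum.md5").read_text().splitlines()
    entries = [line.strip().split(maxsplit=1) for line in lines if line.strip()]
    # md5sum prefixes the file name with '*' for entries written in binary mode
    return bool(entries) and all(
        md5_of(root / fname.lstrip("*")) == digest.lower() for digest, fname in entries
    )


def extract(name: str, data_dir: str) -> Path:
    """Like `tar -zxvf NAME.tar.gz` run in data_dir; returns the NAME/ folder."""
    root = Path(data_dir)
    with tarfile.open(root / f"{name}.tar.gz", "r:gz") as tar:
        if hasattr(tarfile, "data_filter"):
            tar.extractall(root, filter="data")  # safer extraction where supported
        else:
            tar.extractall(root)
    return root / name
```

A typical run calls `download`, `merge_parts`, `verify`, and `extract` in that order with the same `name` and `data_dir`, and stops if `verify` returns `False`.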

🎮 Usage

- The distance from each pixel to the 360° camera is stored in `depth.png`. I also provide `depth_vis.png`, for visualization only.
- Please refer to the code below to load the depth values from `depth.png`:

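import cv2
import numpy as np
import torch

SCALE = 40.0 / 65535.0  # e.g. Hypersim; pick the value for your dataset from the table below
depth = cv2.imread('path/to/depth.png', cv2.IMREAD_UNCHANGED)
depth = depth.astype(np.float32)
depth = depth[:,:,0]
depth = depth * SCALE
depth = torch.from_numpy(depth)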

- Please see the table below for the `SCALE` of each curated dataset:

| Curated dataset | `SCALE` |
|---|---|
| Hypersim | 40.0 / 65535.0 |
| VKITTI, MVS-Synth, 3D-Ken-Burns | 1.0 / 256.0 |
| UnrealStereo4K | 80.0 / 65535.0 |
| DynamicReplica | 20.0 / 65535.0 |

- The valid masks of the depth maps can be obtained via:

valid_mask = torch.logical_and(
    (depth > 1e-5), (depth < 80.0)
).bool()
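The loading snippet, the `SCALE` table, and the valid-mask rule above can be folded into one small NumPy helper. This is a sketch under assumptions: `depth.png` is taken to hold 16-bit values (suggested by the 65535 in the table), the folder-name keys in `SCALES` are an assumed mapping of the downloaded directories onto the table rows, and `decode_depth`, `load_depth`, and `max_range` are hypothetical names. `cv2` is imported only inside `load_depth`, so the decoding logic itself needs just NumPy.

```python
# Sketch: decode a DA-2 depth.png into distances plus a validity mask with NumPy.
# Mirrors the card's snippet (IMREAD_UNCHANGED, channel 0, * SCALE, 1e-5 < d < 80.0).
# Assumptions: depth.png holds 16-bit values, and the folder-name keys below map onto
# the SCALE table rows as guessed here. Helper names are hypothetical.
import numpy as np

SCALES = {
    "hypersim_pano": 40.0 / 65535.0,
    "vkitti_pano": 1.0 / 256.0,
    "mvs_synth_pano": 1.0 / 256.0,
    "3d-ken-burns_pano": 1.0 / 256.0,
    "unreal4k_pano": 80.0 / 65535.0,            # assumed to be UnrealStereo4K
    "dynamic_replica_v2_pano": 20.0 / 65535.0,  # assumed to be DynamicReplica
}


def decode_depth(raw: np.ndarray, dataset: str, max_dist: float = 80.0):
    """Return (distance, valid_mask) for a decoded PNG array."""
    if raw.ndim == 3:
        raw = raw[:, :, 0]  # the card keeps channel 0; 2-D input is accepted as-is
    depth = raw.astype(np.float32) * SCALES[dataset]
    valid = (depth > 1e-5) & (depth < max_dist)
    return depth, valid


def load_depth(path: str, dataset: str):
    """Read depth.png from disk and decode it."""
    import cv2  # optional dependency, only needed for file I/O
    raw = cv2.imread(path, cv2.IMREAD_UNCHANGED)
    if raw is None:  # cv2.imread signals a missing or unreadable file by returning None
        raise FileNotFoundError(path)
    return decode_depth(raw, dataset)


def max_range(dataset: str) -> float:
    """Largest distance a 16-bit value can encode: 65535 * SCALE."""
    return 65535.0 * SCALES[dataset]
```

`torch.from_numpy(depth)` turns the result into a tensor, as in the original snippet. The encodable range 65535 × `SCALE` works out to 40 for Hypersim, 80 for UnrealStereo4K, 20 for DynamicReplica, and 65535 / 256 ≈ 256 for the `1.0 / 256.0` datasets, so the `depth < 80.0` bound in the mask mainly matters for the latter.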

🎓 Citation

If you find these datasets useful, please consider citing 🌹:

@article{li2025depth,
  title={DA$^{2}$: Depth Anything in Any Direction},
  author={Li, Haodong and Zheng, Wangguangdong and He, Jing and Liu, Yuhao and Lin, Xin and Yang, Xin and Chen, Ying-Cong and Guo, Chunchao},
  journal={arXiv preprint arXiv:2509.26618},
  year={2025}
}

@inproceedings{roberts2021hypersim,
  title={{Hypersim}: A Photorealistic Synthetic Dataset for Holistic Indoor Scene Understanding},
  author={Roberts, Mike and Ramapuram, Jason and Ranjan, Anurag and Kumar, Atulit and Bautista, Miguel Angel and Paczan, Nathan and Webb, Russ and Susskind, Joshua M},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={10912--10922},
  year={2021}
}

@article{cabon2020virtual,
  title={Virtual {KITTI} 2},
  author={Cabon, Yohann and Murray, Naila and Humenberger, Martin},
  journal={arXiv preprint arXiv:2001.10773},
  year={2020}
}

@inproceedings{huang2018deepmvs,
  title={{DeepMVS}: Learning Multi-View Stereopsis},
  author={Huang, Po-Han and Matzen, Kevin and Kopf, Johannes and Ahuja, Narendra and Huang, Jia-Bin},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={2821--2830},
  year={2018}
}

@inproceedings{tosi2021smd,
  title={{SMD-Nets}: Stereo Mixture Density Networks},
  author={Tosi, Fabio and Liao, Yiyi and Schmitt, Carolin and Geiger, Andreas},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={8942--8952},
  year={2021}
}

@article{niklaus20193d,
  title={{3D} {Ken Burns} Effect from a Single Image},
  author={Niklaus, Simon and Mai, Long and Yang, Jimei and Liu, Feng},
  journal={ACM Transactions on Graphics (TOG)},
  volume={38},
  number={6},
  pages={1--15},
  year={2019},
  publisher={ACM New York, NY, USA}
}

@inproceedings{karaev2023dynamicstereo,
  title={{DynamicStereo}: Consistent Dynamic Depth from Stereo Videos},
  author={Karaev, Nikita and Rocco, Ignacio and Graham, Benjamin and Neverova, Natalia and Vedaldi, Andrea and Rupprecht, Christian},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={13229--13239},
  year={2023}
}
