---
license: cc-by-nc-nd-4.0
task_categories:
- audio-classification
language:
- zh
- en
tags:
- music
- art
pretty_name: GZ_IsoTech Dataset
size_categories:
- 1K<n<10K

dataset_info:
- config_name: default
  features:
  - name: audio
    dtype:
      audio:
        sampling_rate: 44100
  - name: mel
    dtype: image
  - name: label
    dtype: int8
  - name: name
    dtype: string
  - name: cname
    dtype: string
  - name: pinyin
    dtype: string
  splits:
  - name: train
    num_bytes: 1102596
    num_examples: 2328
  - name: test
    num_bytes: 223896
    num_examples: 496
  download_size: 273681660
  dataset_size: 1326492
- config_name: eval
  features:
  - name: mel
    dtype: image
  - name: cqt
    dtype: image
  - name: chroma
    dtype: image
  - name: label
    dtype: int8
  splits:
  - name: train
    num_bytes: 1560776
    num_examples: 2389
  - name: validation
    num_bytes: 155960
    num_examples: 253
  - name: test
    num_bytes: 158410
    num_examples: 257
  download_size: 249961089
  dataset_size: 1875146
configs:
- config_name: default
  data_files:
  - split: train
    path: default/train/data-*.arrow
  - split: test
    path: default/test/data-*.arrow
- config_name: eval
  data_files:
  - split: train
    path: eval/train/data-*.arrow
  - split: validation
    path: eval/validation/data-*.arrow
  - split: test
    path: eval/test/data-*.arrow
---

# Dataset Card for GZ_IsoTech Dataset

## Original Content

The dataset was created by [[1]](https://archives.ismir.net/ismir2022/paper/000037.pdf) for Guzheng playing technique detection. The original dataset comprises 2,824 variable-length audio clips covering various Guzheng playing techniques: 2,328 clips were sourced from virtual sound banks, and 496 clips were performed by a professional Guzheng artist.

The clips are annotated with eight categories, with the Chinese pinyin and Chinese characters given in parentheses: _Vibrato (chanyin 颤音), Upward Portamento (shanghuayin 上滑音), Downward Portamento (xiahuayin 下滑音), Returning Portamento (huihuayin 回滑音), Glissando (guazou 刮奏, huazhi 花指...), Tremolo (yaozhi 摇指), Harmonic (fanyin 泛音), Plucks (gou 勾, da 打, mo 抹, tuo 托...)_.

## Integration

In the original dataset, the labels were encoded as folder names, which provided the Italian and Chinese pinyin labels. During integration, we added the corresponding Chinese character labels for completeness. After integration, the data structure has six columns: the audio clip sampled at 44,100 Hz, its mel spectrogram, a numerical label, the Italian label, the Chinese character label, and the Chinese pinyin label. The integrated dataset still contains 2,824 clips, with a total duration of 63.98 minutes and an average duration of 1.36 seconds.

Based on the original dataset described above, we processed the data to construct the [default subset](#default-subset) of this integrated version. Because the original dataset was already split into training and test sets at a ratio of roughly 4.7:1 (2,328 training clips and 496 test clips), we keep this original division for the default subset. The data structure of the default subset can be browsed in the [viewer](https://huggingface.co/datasets/ccmusic-database/GZ_IsoTech/viewer). In addition, we have retained the [eval subset](#eval-subset) used in the experiments to ease replication.

## Default Subset Structure

| audio | mel | label | name | cname | pinyin |
| :---: | :---: | :---: | :---: | :---: | :---: |
| .wav, 44100Hz | .jpg, 44100Hz | 8-class | string | string | string |

### Data Instances

.zip(.flac, .csv)

### Data Fields

Based on the diverse playing techniques characteristic of the Guzheng, the clips are divided into eight categories: Vibrato (chanyin), Upward Portamento (shanghuayin), Downward Portamento (xiahuayin), Returning Portamento (huihuayin), Glissando (guazou, huazhi), Tremolo (yaozhi), Harmonic (fanyin), and Plucks (gou, da, mo, tuo…).

## Dataset Description

### Dataset Summary

Because the raw dataset is already split into training and test sets (2,328 and 496 clips, respectively), we keep the original division. Rather than relying on the platform's automatic splitting mechanisms, we use the pre-split data directly in the subsequent integration steps.
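As a quick check on the split sizes, the sketch below computes each split's share of its subset. The counts are copied from the `dataset_info` metadata of this card; the helper name `split_fractions` is hypothetical and not part of any library.

```python
def split_fractions(counts: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the subset total."""
    total = sum(counts.values())
    return {split: n / total for split, n in counts.items()}


# Split sizes as listed in this card's dataset_info metadata.
default_counts = {"train": 2328, "test": 496}
eval_counts = {"train": 2389, "validation": 253, "test": 257}

if __name__ == "__main__":
    for name, counts in (("default", default_counts), ("eval", eval_counts)):
        shares = split_fractions(counts)
        print(name, {k: f"{v:.1%}" for k, v in shares.items()})
```

For the default subset this gives about 82.4% training and 17.6% test data, i.e. roughly 4.7:1.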

### Statistics

|  |  |  |

| :---------------------------------------------------------------------------------------------------: | :-----------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------: |

| **Fig. 1** | **Fig. 2** | **Fig. 3** |

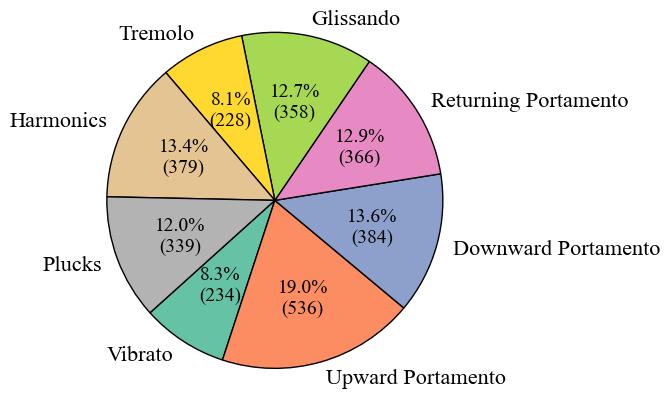

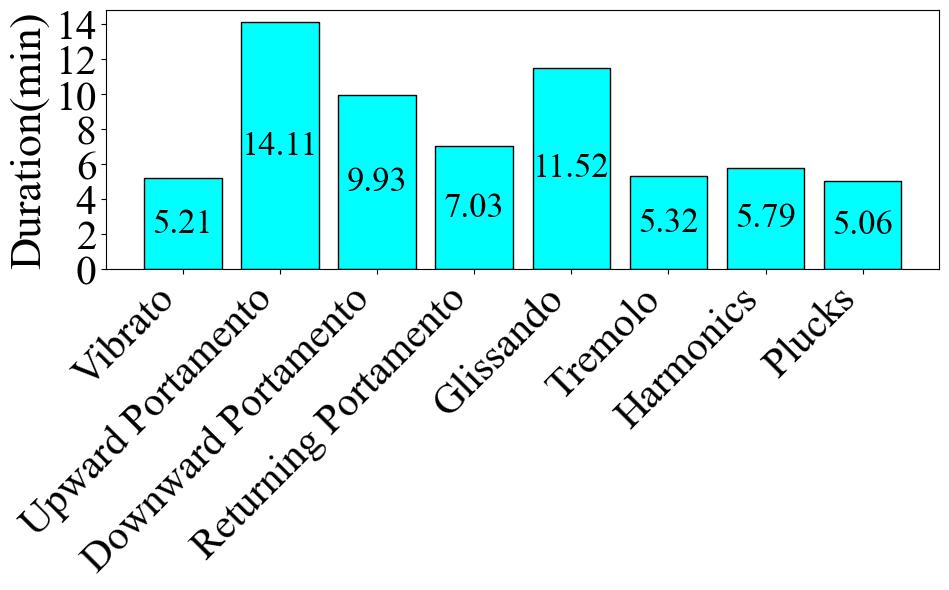

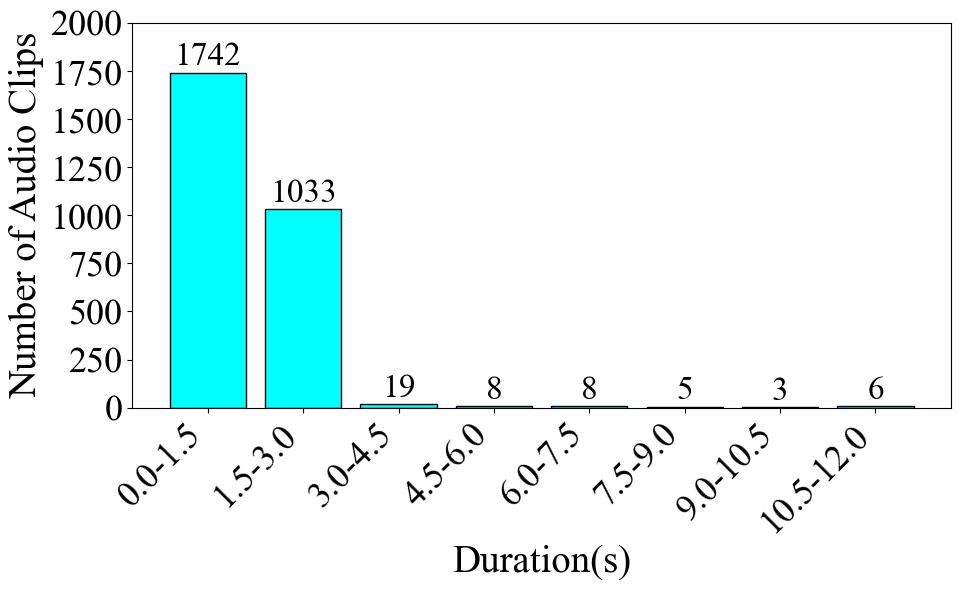

First, **Fig. 1** shows the number and proportion of audio clips in each category. The largest category is Upward Portamento, with 536 clips (19.0%), and the smallest is Tremolo, with 228 clips (8.1%), a difference of 10.9 percentage points. Next, **Fig. 2** shows the total audio duration of each category. Upward Portamento also has the longest total duration (14.11 minutes), consistent with the pie chart. However, the shortest total duration belongs to Plucks (5.06 minutes), which differs from its ranking in the pie chart. Overall, compared with other datasets in the database, this dataset is relatively balanced. Finally, **Fig. 3** shows the number of audio clips in each duration interval. The 0-2 second interval contains the most clips, and the number of clips drops sharply for durations above 4 seconds.
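The per-category proportions in Fig. 1 are simple count ratios. A minimal sketch, assuming the labels of both default splits are gathered into one list (the helpers `class_shares` and `largest_gap` are hypothetical), could look like this; the demo pads the two quoted classes with a placeholder class to reproduce the 19.0% and 8.1% figures.

```python
from collections import Counter


def class_shares(labels):
    """Map each class to (clip count, percentage of all clips)."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {c: (n, 100.0 * n / total) for c, n in counts.items()}


def largest_gap(shares):
    """Percentage-point gap between the largest and smallest class."""
    pcts = [pct for _, pct in shares.values()]
    return max(pcts) - min(pcts)


if __name__ == "__main__":
    # Toy labels: the two classes quoted above plus a placeholder for the rest.
    labels = ["Upward Portamento"] * 536 + ["Tremolo"] * 228 + ["other"] * 2060
    shares = class_shares(labels)
    print(round(shares["Upward Portamento"][1], 1), round(shares["Tremolo"][1], 1))  # 19.0 8.1
```

With the real data, the `label` (or `name`) column of both default splits could be passed to `class_shares` instead of the toy list.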

| Statistical items | Values |
| :-----------------: | :------------------: |
| Total count | `2824` |
| Total duration (s) | `3838.6787528344717` |
| Mean duration (s) | `1.359305507377644` |
| Min duration (s) | `0.3935827664399093` |
| Max duration (s) | `11.5` |
| Eval subset total | `2899` |
| Class with max durs | `Glissando` |
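The duration rows above can be recomputed from per-clip sample counts. This is a sketch under the assumption that a clip's duration is its number of samples divided by the 44,100 Hz sampling rate declared for the `audio` feature; `duration_summary` is a hypothetical helper.

```python
from statistics import mean

SAMPLING_RATE = 44_100  # Hz, as declared for the `audio` feature


def duration_summary(sample_counts, sr=SAMPLING_RATE):
    """Summarize clip durations (in seconds) from per-clip sample counts."""
    durs = [n / sr for n in sample_counts]
    return {
        "total_count": len(durs),
        "total_s": sum(durs),
        "mean_s": mean(durs),
        "min_s": min(durs),
        "max_s": max(durs),
    }


if __name__ == "__main__":
    # Three toy clips of 1 s, 2 s and 0.5 s at 44.1 kHz.
    print(duration_summary([44_100, 88_200, 22_050]))
```

With the real data, the sample counts would come from the decoded audio arrays; how those arrays are exposed depends on the installed `datasets` version.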

### Supported Tasks and Leaderboards

MIR, audio classification

### Languages

Chinese, English

## Usage

### Default subset

```python
from datasets import load_dataset

# Load the default subset (train/test splits)
ds = load_dataset("ccmusic-database/GZ_IsoTech")

for item in ds["train"]:
    print(item)

for item in ds["test"]:
    print(item)
```

### Eval subset

```python
from datasets import load_dataset

# Load the eval subset (train/validation/test splits of mel, CQT and chroma images)
ds = load_dataset("ccmusic-database/GZ_IsoTech", name="eval")

for item in ds["train"]:
    print(item)

for item in ds["validation"]:
    print(item)

for item in ds["test"]:
    print(item)
```

## Maintenance

```bash
# Clone the repository without downloading LFS file contents (pointer files only)
GIT_LFS_SKIP_SMUDGE=1 git clone git@hf.co:datasets/ccmusic-database/GZ_IsoTech
cd GZ_IsoTech
```

## Dataset Creation

### Curation Rationale

The Guzheng is a traditional Chinese instrument with diverse playing techniques. Instrument playing techniques (IPT) play an important role in musical performance. However, most existing IPT detection methods handle variable-length audio inefficiently and do not guarantee generalization, because they rely on a single sound bank for both training and testing. In this study, we propose an end-to-end Guzheng playing technique detection system using Fully Convolutional Networks that can be applied to variable-length audio. Because each Guzheng playing technique is applied to a note, a dedicated onset detector is trained to divide the audio into notes, and its predictions are fused with frame-wise IPT predictions. During fusion, we sum the IPT predictions frame by frame within each note and take the IPT with the highest probability as the final output for that note. We create a new dataset named GZ_IsoTech from multiple sound banks and real-world recordings for Guzheng performance analysis. Our approach achieves 87.97% frame-level accuracy and an 80.76% note-level F1 score, outperforming existing works by a large margin, which indicates the effectiveness of the proposed method for IPT detection.
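The note-level fusion step described above (summing frame-wise IPT predictions within each note and keeping the most probable technique) can be sketched with NumPy. This is not the authors' implementation: the array layout, the assumption that a note runs from one onset to the next, and the function name `fuse_notes` are illustrative only.

```python
import numpy as np


def fuse_notes(frame_probs: np.ndarray, onsets: list[int]) -> list[int]:
    """Return one technique index per note.

    frame_probs: array of shape (num_frames, num_classes) holding frame-wise
        IPT probabilities.
    onsets: strictly increasing frame indices at which notes start. Each note
        is assumed to last until the next onset (or the final frame); frames
        before the first onset are ignored.
    """
    bounds = list(onsets) + [frame_probs.shape[0]]
    note_labels = []
    for start, end in zip(bounds[:-1], bounds[1:]):
        # Add up the frame-wise predictions inside the note...
        note_score = frame_probs[start:end].sum(axis=0)
        # ...and keep the most probable technique as the note's label.
        note_labels.append(int(np.argmax(note_score)))
    return note_labels


if __name__ == "__main__":
    probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.2, 0.8], [0.1, 0.9], [0.6, 0.4]])
    print(fuse_notes(probs, onsets=[0, 2]))  # [0, 1]
```

Summing rather than voting lets confident frames outweigh ambiguous ones within a note; in the toy example the last frame of the second note leans toward class 0, but the note is still labeled class 1.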

### Source Data

#### Initial Data Collection and Normalization

Dichucheng Li, Monan Zhou

#### Who are the source language producers?

Students from FD-LAMT

## Considerations for Using the Data

### Social Impact of Dataset

Promoting the development of the music AI industry

### Discussion of Biases

Only for Traditional Chinese Instruments

### Other Known Limitations

Insufficient samples

## Additional Information

### Dataset Curators

Dichucheng Li

### Evaluation

[1] [Li, Dichucheng, Yulun Wu, Qinyu Li, Jiahao Zhao, Yi Yu, Fan Xia and Wei Li. “Playing Technique Detection by Fusing Note Onset Information in Guzheng Performance.” International Society for Music Information Retrieval Conference (2022).](https://archives.ismir.net/ismir2022/paper/000037.pdf)

[2]

### Citation Information

```bibtex
@dataset{zhaorui_liu_2021_5676893,
  author    = {Monan Zhou and Shenyang Xu and Zhaorui Liu and Zhaowen Wang and Feng Yu and Wei Li and Baoqiang Han},
  title     = {CCMusic: an Open and Diverse Database for Chinese Music Information Retrieval Research},
  month     = {mar},
  year      = {2024},
  publisher = {HuggingFace},
  version   = {1.2},
  url       = {https://huggingface.co/ccmusic-database}
}
```

### Contributions

Promoting the development of the music AI industry